CS224n/assignment3/: Window-based Named Entity Recognition (Baseline Model)

Introduction

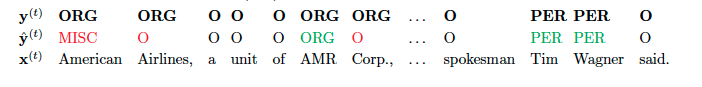

This assignment builds 3 different models for the named entity recognition (NER) task. For a given word in its context, we want to predict which of the following 5 categories the word belongs to:

- Person (PER)

- Organization (ORG)

- Location (LOC)

- Miscellaneous (MISC)

- Null (O): the word does not represent a named entity; most words fall into this category.

This is a 5-class classification problem, so each label is a one-hot vector over the classes [PER, ORG, LOC, MISC, O].
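As a small illustration of this label encoding, here is a minimal sketch. It is not code from the assignment: the helper name `one_hot` is made up for this example, and the class order is simply the one given in the label vector above.

```python
import numpy as np

# Class order taken from the label vector [PER, ORG, LOC, MISC, O].
LBLS = ["PER", "ORG", "LOC", "MISC", "O"]

def one_hot(label):
    """Return the one-hot label vector for a class name (illustrative helper)."""
    vec = np.zeros(len(LBLS), dtype=int)
    vec[LBLS.index(label)] = 1
    return vec

print(one_hot("PER"))  # [1 0 0 0 0]
print(one_hot("O"))    # [0 0 0 0 1]
```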

Window-based Model of NER (Baseline Model)

Let \(\mathbf{x} = [\mathbf{x}_1, \mathbf{x}_2, \dots, \mathbf{x}_T]\) be a sentence of length \(T\), where each \(\mathbf{x}_t\), \(t = 1, 2, \dots, T\), is a one-hot vector of the size of the vocabulary that encodes the index of the word at position \(t\). Given a window size \(w\), the windowed input for the \(t\)th word in \(\mathbf{x}\) is \(\mathbf{x}^{t} = [\mathbf{x}_{t-w}, \dots, \mathbf{x}_{t}, \dots, \mathbf{x}_{t+w}]\). For the first word, the windowed input is \(\mathbf{x}^{1} = [\mbox{<start>}, \dots, \mbox{<start>}, \mathbf{x}_{1}, \dots, \mathbf{x}_{1+w}]\), padded with \(w\) copies of \(\mbox{<start>}\). Similarly, for the last word, the windowed input is \(\mathbf{x}^{T} = [\mathbf{x}_{T-w}, \dots, \mathbf{x}_{T}, \mbox{<end>}, \dots, \mbox{<end>}]\), padded with \(w\) copies of \(\mbox{<end>}\). Each sentence \(\mathbf{x}\) comes with labels \(\mathbf{y} = [\mathbf{y}^1, \mathbf{y}^2, \dots, \mathbf{y}^T]\) of the same length \(T\), where each \(\mathbf{y}^t\) is also a one-hot vector. The label vector for the windowed input \(\mathbf{x}^t\) is simply \(\mathbf{y}^t\); its \(i\)th component is denoted by \(y_i^t\), and the component at the index of the true class equals 1.

- Example: \(\mathbf{x} = [\mbox{Jim}_1 \mbox{ bought}_2 \mbox{ 300}_3 \mbox{ shares}_4 \mbox{ of}_5 \mbox{ Acme}_6 \mbox{ Corp}_7 \mbox{ in}_8 \mbox{ 2006}_9]\), where \(T = 9\). Let \(w = 1\), then

\[\mathbf{x}^{1} = [\mbox{<start>}, \mbox{ Jim}, \mbox{ bought}], \quad \mathbf{y}^1 = [1,0,0,0,0] \quad (\mbox{PER, the label of 'Jim'})\] \[\dots\] \[\mathbf{x}^{9} = [\mbox{in}, \mbox{ 2006}, \mbox{<end>}], \quad \mathbf{y}^9 = [0,0,0,0,1] \quad (\mbox{O, the label of '2006'})\]

- Model: Using the windowed input \(\mathbf{x}^t\), we want to predict the label \(\mathbf{y}^t\) of the central word in \(\mathbf{x}^t\), i.e. the \(t\)th word of the raw input \(\mathbf{x}\).

Define \(\mathbf{E} \in \mathbb{R}^{V\times D}\), \(\mathbf{W} \in \mathbb{R}^{(2w+1)D \times H}\), \(\mathbf{U} \in \mathbb{R}^{H\times 5}\), \(\mathbf{b}_1 \in \mathbb{R}^{1\times H}\) and \(\mathbf{b}_2 \in \mathbb{R}^{1\times 5}\), where \(V\) is the vocabulary size, \(D\) the embedding size and \(H\) the hidden size. For the \(t\)th word in the raw input \(\mathbf{x}\), with windowed input \(\mathbf{x}^t = [\mathbf{x}_{t-w}, \dots, \mathbf{x}_t, \dots, \mathbf{x}_{t+w}]\) and window size \(w\), the model is

\[\begin{array}{rcl} \mathbf{e}^t &=& [\mathbf{x}_{t-w}\mathbf{E}, \dots, \mathbf{x}_t\mathbf{E}, \dots, \mathbf{x}_{t+w}\mathbf{E}]\\ \mathbf{h}^t &=& \mathrm{ReLU}(\mathbf{e}^t\mathbf{W} + \mathbf{b}_1)\\ \hat{\mathbf{y}}^t &=& \mathrm{softmax}(\mathbf{h}^t\mathbf{U}+\mathbf{b}_2)\\ J &=& \sum_t CE(\mathbf{y}^t, \hat{\mathbf{y}}^t) \\ &=& -\sum_t\sum_i y_i^t \log(\hat{y}_i^t) \end{array}\]
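The equations above can be traced for a single window with plain NumPy. This is only a sketch under stated assumptions: the sizes, the random parameters and the word ids in the window are made up for illustration, and the one-hot rows are multiplied by \(\mathbf{E}\) explicitly to mirror the notation (the assignment code uses an embedding lookup instead).

```python
import numpy as np

rng = np.random.default_rng(0)
V, D, H, C, w = 20, 4, 8, 5, 1                    # toy sizes, chosen only for illustration

E = rng.normal(size=(V, D))                       # embedding matrix
W = 0.1 * rng.normal(size=((2 * w + 1) * D, H))   # hidden-layer weights
b1 = np.zeros((1, H))
U = 0.1 * rng.normal(size=(H, C))                 # output-layer weights
b2 = np.zeros((1, C))

def forward(window_ids, y):
    """Forward pass and cross-entropy loss for one windowed input."""
    X = np.eye(V)[window_ids]                     # (2w+1, V): one one-hot row per word
    e = (X @ E).reshape(1, -1)                    # (1, (2w+1)*D): concatenated word vectors
    h = np.maximum(0.0, e @ W + b1)               # ReLU hidden layer
    z = h @ U + b2                                # class scores
    z = z - z.max()                               # shift for a numerically stable softmax
    y_hat = np.exp(z) / np.exp(z).sum()           # softmax probabilities, shape (1, C)
    loss = -np.sum(y * np.log(y_hat))             # CE(y, y_hat)
    return y_hat, loss

y = np.array([1, 0, 0, 0, 0])                     # one-hot label (PER)
y_hat, loss = forward([15, 1, 2], y)              # hypothetical word ids for one window
print(y_hat.shape, loss)
```

Summing this loss over all windows \(t\) gives \(J\).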

- Code:

- Load and preprocess data

The first two (sentence, label) pairs from the data are

    [(['EU', 'rejects', 'German', 'call', 'to', 'boycott', 'British', 'lamb', '.'],
      ['ORG', 'O', 'MISC', 'O', 'O', 'O', 'MISC', 'O', 'O']),
     (['Peter', 'Blackburn'], ['PER', 'PER'])]

After loading the data, a 'token to id' dictionary (tok2id) is built:

    {'eu': 1, 'rejects': 2, 'german': 3, 'call': 4, 'to': 5, 'boycott': 6,
     'british': 7, 'lamb': 8, '.': 9, 'peter': 10, 'blackburn': 11,
     'CASE:aa': 11, 'CASE:AA': 12, 'CASE:Aa': 13, 'CASE:aA': 14,
     '<s>': 15, '</s>': 16, 'UUUNKKK': 17}

In addition to the words of each sentence, the dictionary also contains the 4 case types of a word ('CASE:'), the start token (“\(<s>\)”), the end token (“\(</s>\)”) and the unknown-word token ('UUUNKKK') for later use. Note that in this output 'blackburn' and 'CASE:aa' share the id 11.
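To make the [id, case type] featurization concrete, here is a minimal sketch built on the toy dictionary above. The helper names `casing` and `featurize` and the lower-casing step are assumptions made for this illustration; the assignment's own helpers may differ in detail (for example in how numbers are normalized).

```python
# Toy dictionary copied from the 2-sentence example above.
tok2id = {'eu': 1, 'rejects': 2, 'german': 3, 'call': 4, 'to': 5, 'boycott': 6,
          'british': 7, 'lamb': 8, '.': 9, 'peter': 10, 'blackburn': 11,
          'CASE:aa': 11, 'CASE:AA': 12, 'CASE:Aa': 13, 'CASE:aA': 14,
          '<s>': 15, '</s>': 16, 'UUUNKKK': 17}

def casing(word):
    """Map a word to one of the 4 case types (assumed rules)."""
    if word.islower():
        return 'aa'      # all lower case
    if word.isupper():
        return 'AA'      # all upper case
    if word[:1].isupper():
        return 'Aa'      # capitalized
    return 'aA'          # everything else, e.g. punctuation

def featurize(word):
    """Return [word id, case id]; unknown words fall back to 'UUUNKKK'."""
    word_id = tok2id.get(word.lower(), tok2id['UUUNKKK'])
    case_id = tok2id['CASE:' + casing(word)]
    return [word_id, case_id]

print([featurize(w) for w in ['EU', 'rejects', 'German', '.']])
# [[1, 12], [2, 11], [3, 13], [9, 14]]
```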

With the tok2id dictionary, each word is represented by a vector [id, case type], each sentence is a list of such vectors, and each sentence is paired with its labels in a tuple. The train_data and dev_data returned from load_and_preprocess_data are assigned to train and dev. In this 2-sentence example each of them is a list of 2 tuples (train and dev are the same dataset here):

    [([[1, 12], [2, 11], [3, 13], [4, 11], [5, 11], [6, 11], [7, 13], [8, 11], [9, 14]],
      [1, 4, 3, 4, 4, 4, 3, 4, 4]),
     ([[10, 13], [11, 13]], [0, 0])]

Inside load_and_preprocess_data, the variables train and dev hold the raw data; they are returned and assigned to train_raw and dev_raw, respectively:

    [(['EU', 'rejects', 'German', 'call', 'to', 'boycott', 'British', 'lamb', '.'],
      ['ORG', 'O', 'MISC', 'O', 'O', 'O', 'MISC', 'O', 'O']),
     (['Peter', 'Blackburn'], ['PER', 'PER'])]

helper, also returned from load_and_preprocess_data, is an object with two attributes: tok2id and max_length (9 in our example).

The returned values map to the caller's names as follows:

    # last line of load_and_preprocess_data
    return helper, train_data, dev_data, train, dev

    # at the call site
    helper, train, dev, train_raw, dev_raw = load_and_preprocess_data(args)

- Load embeddings

Each word vector has 50 dimensions, and the embedding matrix has shape 19 × 50: tok2id has 18 entries, and the function adds one extra row, with the first row (index 0) set to all 0s.

    embeddings = np.array(np.random.randn(len(helper.tok2id) + 1, EMBED_SIZE), dtype=np.float32)
    embeddings[0] = 0.

The data folder contains a file with the whole vocabulary (vocab.txt, 100232 words) and a file with all word vectors (wordVector.txt, 100232 vectors). The function load_word_vector_mapping() pairs the \(i\)th word in the vocabulary with the \(i\)th vector. The first 10 records in the resulting ordered dictionary (ret) are

    ['UUUNKKK', 'the', ',', '.', 'of', 'and', 'in', '"', 'a', 'to'],
    [array([.....size50]), array([.....size50]), ....., array([.....size50])]

that is, 10 words (keys) matched with 10 arrays (values), where each array has length 50, the embedding size.
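The pairing of the \(i\)th word with the \(i\)th vector can be sketched as follows. This is an assumed implementation written for illustration, not the assignment's own function body; it assumes both arguments are open text files with one word, or one whitespace-separated vector, per line, and it uses tiny in-memory files with 3-dimensional vectors instead of the real data.

```python
import io
from collections import OrderedDict

import numpy as np

def load_word_vector_mapping(vocab_file, vector_file):
    """Pair the i-th word with the i-th vector, keeping file order (assumed behaviour)."""
    ret = OrderedDict()
    for word, line in zip(vocab_file, vector_file):
        ret[word.strip()] = np.array([float(v) for v in line.split()])
    return ret

# Tiny in-memory stand-ins for vocab.txt and wordVector.txt.
vocab = io.StringIO("UUUNKKK\nthe\n,\n")
vectors = io.StringIO("0.1 0.2 0.3\n0.4 0.5 0.6\n0.7 0.8 0.9\n")

ret = load_word_vector_mapping(vocab, vectors)
print(list(ret.keys()))  # ['UUUNKKK', 'the', ',']
print(ret['the'])        # [0.4 0.5 0.6]
```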

For our tiny 2-sentence example, the word vectors of the tokens in tok2id are looked up in ret and copied into the corresponding rows of the embedding matrix:

    for word, vec in load_word_vector_mapping(args.vocab, args.vectors).items():
        word = normalize(word)
        if word in helper.tok2id:
            embeddings[helper.tok2id[word]] = vec

    embeddings.shape
    Out[109]: (19, 50)

- Making windowed input and output pair

Each word in the two sentences is featurized as a length-2 vector [id, case_type]. The train data (also the dev data) is

    In: train
    Out: [([[1, 12], [2, 11], [3, 13], [4, 11], [5, 11], [6, 11], [7, 13], [8, 11], [9, 14]],
           [1, 4, 3, 4, 4, 4, 3, 4, 4]),
          ([[10, 13], [11, 13]], [0, 0])]

The start \(<s>\) and end \(</s>\) tokens are featurized as

    helper.START
    [15, 11]
    helper.END
    [16, 11]

The following code makes a ([windowed data], label) pair for each word:

    def make_windowed_data(data, start, end, window_size=1):
        windowed_data = []
        for sentence, label in data:
            l = len(sentence)
            # pad both ends so that the first and last words get full windows
            sentence_extended = [start] * window_size + sentence + [end] * window_size
            for i in range(window_size, l + window_size):
                temp = []
                # concatenate the [id, case type] features of every word in the window
                for j in range(i - window_size, i + window_size + 1):
                    temp.extend(sentence_extended[j])
                window_label = (temp, label[i - window_size])
                windowed_data.append(window_label)
        return windowed_data

    make_windowed_data(train, helper.START, helper.END)

The windowed data returned is

    [([15, 11, 1, 12, 2, 11], 1),
     ([1, 12, 2, 11, 3, 13], 4),
     ([2, 11, 3, 13, 4, 11], 3),
     ([3, 13, 4, 11, 5, 11], 4),
     ([4, 11, 5, 11, 6, 11], 4),
     ([5, 11, 6, 11, 7, 13], 4),
     ([6, 11, 7, 13, 8, 11], 3),
     ([7, 13, 8, 11, 9, 14], 4),
     ([8, 11, 9, 14, 16, 11], 4),
     ([15, 11, 10, 13, 11, 13], 0),
     ([10, 13, 11, 13, 16, 11], 0)]

- Define the window model

Build the model using TensorFlow:

- add placeholders. The input placeholder holds the integer feature ids of each window, with shape (None, n_window_features); for window size 1, each window has 3 words × 2 features = 6 ids, which become a vector of size \(6D\), \(D = 50\), after the embedding lookup. The labels placeholder holds the index of the correct label for each window, with shape (None,). The dropout placeholder is a scalar between 0 and 1 that is passed to tf.nn.dropout, where in TensorFlow 1.x it acts as the keep probability:

    def add_placeholders(self):
        self.input_placeholder = tf.placeholder(tf.int32, [None, self.config.n_window_features])
        self.labels_placeholder = tf.placeholder(tf.int32, [None,])
        self.dropout_placeholder = tf.placeholder(tf.float32)

- create feed dictionary for the placeholders

    def create_feed_dict(self, inputs_batch, labels_batch=None, dropout=1):
        feed_dict = {self.input_placeholder: inputs_batch, self.dropout_placeholder: dropout}
        # labels are only needed for training, not for prediction
        if labels_batch is not None:
            feed_dict[self.labels_placeholder] = labels_batch
        return feed_dict

The inputs_batch and labels_batch are obtained as follows:

(i). Make the windowed data and assign the resulting pairs for train and dev to train_examples and dev_set:

    def preprocess_sequence_data(self, examples):
        return make_windowed_data(examples, start=self.helper.START, end=self.helper.END, window_size=self.config.window_size)

    train_examples = self.preprocess_sequence_data(train)
    dev_set = self.preprocess_sequence_data(dev)

(ii). Make minibatches from train_examples. The train_examples list (as well as dev_set) is the list of 11 windowed inputs returned by make_windowed_data above. We want to split these 11 inputs into minibatches:

First, recombine the inputs and labels by grouping the inputs into one array and the labels into another:

    In: batches = [np.array(col) for col in zip(*train_examples)]
        batches
    Out: [array([[15, 11,  1, 12,  2, 11],
                 [ 1, 12,  2, 11,  3, 13],
                 [ 2, 11,  3, 13,  4, 11],
                 [ 3, 13,  4, 11,  5, 11],
                 [ 4, 11,  5, 11,  6, 11],
                 [ 5, 11,  6, 11,  7, 13],
                 [ 6, 11,  7, 13,  8, 11],
                 [ 7, 13,  8, 11,  9, 14],
                 [ 8, 11,  9, 14, 16, 11],
                 [15, 11, 10, 13, 11, 13],
                 [10, 13, 11, 13, 16, 11]]),
          array([1, 4, 3, 4, 4, 4, 3, 4, 4, 0, 0])]

Let

    minibatch_size = 3
    data_size = len(batches[0])  # data_size = 11

Shuffle the indices:

    In: indices = np.arange(data_size)
        np.random.shuffle(indices)
        indices
    Out: array([ 7, 10, 8, 9, 4, 6, 2, 3, 5, 0, 1])

Then select minibatches of minibatch_size according to the shuffled indices:

    for minibatch_start in np.arange(0, data_size, minibatch_size):
        minibatch_indices = indices[minibatch_start:minibatch_start + minibatch_size]
        print([d[minibatch_indices] for d in batches])

The resulting minibatches from the 11 inputs with a batch size of 3 are shown below (the rows come from a different random shuffle than the indices printed above):

    [array([[ 8, 11,  9, 14, 16, 11],
            [ 6, 11,  7, 13,  8, 11],
            [15, 11,  1, 12,  2, 11]]), array([4, 3, 1])]
    [array([[ 1, 12,  2, 11,  3, 13],
            [ 5, 11,  6, 11,  7, 13],
            [ 4, 11,  5, 11,  6, 11]]), array([4, 4, 4])]
    [array([[ 2, 11,  3, 13,  4, 11],
            [10, 13, 11, 13, 16, 11],
            [ 7, 13,  8, 11,  9, 14]]), array([3, 0, 4])]
    [array([[ 3, 13,  4, 11,  5, 11],
            [15, 11, 10, 13, 11, 13]]), array([4, 0])]

Each minibatch contains the inputs_batch and labels_batch passed to create_feed_dict(). The original code uses yield instead of print, so training proceeds one minibatch at a time: train on the first minibatch, the progress bar moves one step, then train on the next minibatch, the progress bar moves another step, and so on.
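The minibatching steps above can be collected into one generator. The following is a minimal sketch under assumptions, not the assignment's own helper: the `seed` parameter and the toy `examples` list are added here only so that the example is self-contained and reproducible.

```python
import numpy as np

def minibatches(examples, batch_size, shuffle=True, seed=None):
    """Yield [inputs_batch, labels_batch] lists, one minibatch at a time."""
    columns = [np.array(col) for col in zip(*examples)]   # [all inputs, all labels]
    data_size = len(columns[0])
    indices = np.arange(data_size)
    if shuffle:
        np.random.default_rng(seed).shuffle(indices)
    for start in range(0, data_size, batch_size):
        idx = indices[start:start + batch_size]
        yield [col[idx] for col in columns]

# 11 toy (window, label) pairs standing in for train_examples.
examples = [([i, i + 1, i + 2], i % 5) for i in range(11)]

for inputs_batch, labels_batch in minibatches(examples, 3, shuffle=False):
    print(inputs_batch.shape, labels_batch)
```

Because the function yields instead of returning a list, the caller can train on each minibatch as soon as it is produced; the last minibatch simply holds the 2 leftover examples.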

- add embedding matrix

In Step 2 (load embeddings) we built a \(19\times50\) embedding matrix \(\mathbf{E}\). Since each inputs_batch contains feature ids rather than vectors, we first look up the corresponding vectors for each windowed input (\(\begin{bmatrix}\mathbf{x}_{t-1}\mathbf{E} \\ \mathbf{x}_{t}\mathbf{E}\\ \mathbf{x}_{t+1}\mathbf{E}\end{bmatrix}\) for \(w = 1\)), then flatten them into \(\mathbf{e}^t = [\mathbf{x}_{t-1}\mathbf{E}, \mathbf{x}_{t}\mathbf{E}, \mathbf{x}_{t+1}\mathbf{E}]\), which has size \(1\times (3*2*50)\) in our example (3 = number of words in a window, 2 = number of features per word [id, case type], \(D = 50\)). This corresponds to the first equation of the neural network model defined at the beginning.
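The shapes involved in the lookup and flattening can be checked with NumPy. This is a sketch with a random stand-in for the embedding matrix (an assumption; the real matrix holds the pretrained vectors), using two windows from the example. It also confirms that looking up a row of \(\mathbf{E}\) by id gives the same result as multiplying a one-hot vector by \(\mathbf{E}\).

```python
import numpy as np

rng = np.random.default_rng(0)
E = rng.normal(size=(19, 50)).astype(np.float32)    # random stand-in for the 19 x 50 matrix

inputs_batch = np.array([[15, 11, 1, 12, 2, 11],
                         [1, 12, 2, 11, 3, 13]])    # two windows, 6 feature ids each

looked_up = E[inputs_batch]                         # one row of E per feature id
e = looked_up.reshape(-1, 6 * 50)                   # flatten each window into one row

# Row lookup is equivalent to one-hot vector times E.
one_hot_rows = np.eye(19, dtype=np.float32)[inputs_batch[0]]   # (6, 19)
assert np.allclose(one_hot_rows @ E, looked_up[0])

print(looked_up.shape, e.shape)  # (2, 6, 50) (2, 300)
```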

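    def add_embedding(self):
        # trainable embedding matrix initialised from the pretrained vectors
        embedded = tf.Variable(self.pretrained_embeddings)
        # shape: (batch, n_window_features, embed_size)
        embeddings = tf.nn.embedding_lookup(embedded, self.input_placeholder)
        # flatten each window into a single row
        embeddings = tf.reshape(embeddings, [-1, self.config.n_window_features * self.config.embed_size])
        return embeddings

- add hidden layer and the prediction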

Define the variables \(\mathbf{b}_1, \mathbf{b}_2, \mathbf{W}, \mathbf{U}\) and compute the prediction:

    def add_prediction_op(self):
        x = self.add_embedding()
        dropout_rate = self.dropout_placeholder
        with tf.variable_scope("transformation"):
            b1 = tf.get_variable(name='b1', shape=[self.config.hidden_size], initializer=tf.contrib.layers.xavier_initializer(seed=1))
            b2 = tf.get_variable(name='b2', shape=[self.config.n_classes], initializer=tf.contrib.layers.xavier_initializer(seed=2))
            W = tf.get_variable(name='W', shape=[self.config.n_window_features * self.config.embed_size, self.config.hidden_size], initializer=tf.contrib.layers.xavier_initializer(seed=3))
            U = tf.get_variable(name='U', shape=[self.config.hidden_size, self.config.n_classes], initializer=tf.contrib.layers.xavier_initializer(seed=4))

            z1 = tf.matmul(x, W) + b1
            h = tf.nn.relu(z1)
            h_drop = tf.nn.dropout(h, dropout_rate)
            # unnormalised scores (logits); the softmax is applied inside the loss
            pred = tf.matmul(h_drop, U) + b2
        return pred

- define loss function and optimization method

    def add_loss_op(self, pred):
        # softmax + cross entropy on integer class labels, averaged over the batch
        loss = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=pred, labels=self.labels_placeholder)
        loss = tf.reduce_mean(loss)
        return loss

    def add_training_op(self, loss):
        adam_optim = tf.train.AdamOptimizer(self.config.lr)
        train_op = adam_optim.minimize(loss)
        return train_op

- Fit the model

- initialize the window model

    config = Config()
    helper, train, dev, train_raw, dev_raw = load_and_preprocess_data(args)
    embeddings = load_embeddings(args, helper)
    model = WindowModel(helper, config, embeddings)
    # model.pretrained_embeddings = embeddings

where helper and embeddings are introduced in steps 1 and 2 above.

- fit the model

(i). train_examples and dev_set are obtained as above using make_windowed_data().

(ii). We run 10 epochs to train the model:

    def fit(self, sess, saver, train, dev):
        train_examples = self.preprocess_sequence_data(train)
        dev_set = self.preprocess_sequence_data(dev)
        for epoch in range(self.config.n_epochs):
            # logger.info("Epoch %d out of %d", epoch + 1, self.config.n_epochs)
            score = self.run_epoch(sess, train_examples, dev_set, train, dev)

In each epoch, run_epoch() first gets the minibatches from train_examples with a batch size of 3 using minibatches() above; recall that each yielded minibatch is [inputs_batch = array([[,,,,,],[,,,,,],[,,,,,]]), labels_batch = array([,,])].

Feed inputs_batch and labels_batch into create_feed_dict() within train_on_batch(). Starting from model.pretrained_embeddings, the graph built by add_embedding, add_prediction_op, add_loss_op and add_training_op computes the loss and updates the parameters: the embeddings \(\mathbf{e}^t\) of the windows in the minibatch, \(\mathbf{W}, \mathbf{U}, \mathbf{b}_1, \mathbf{b}_2\). After training on the first minibatch, the progress bar advances by 1, then the second minibatch is trained on, and so on.

    def train_on_batch(self, sess, inputs_batch, labels_batch):
        feed = self.create_feed_dict(inputs_batch, labels_batch=labels_batch,
                                     dropout=self.config.dropout)
        _, loss = sess.run([self.train_op, self.loss], feed_dict=feed)
        return loss

    def run_epoch(self, sess, train_examples, dev_set, train_examples_raw, dev_set_raw):
        prog = Progbar(target=1 + int(len(train_examples) / self.config.batch_size))
        for i, batch in enumerate(minibatches(train_examples, self.config.batch_size)):
            loss = self.train_on_batch(sess, *batch)
            prog.update(i + 1, [("train loss", loss)])
            if self.report: self.report.log_train_loss(loss)
        print("")

        logger.info("Evaluating on development data")
        token_cm, entity_scores = self.evaluate(sess, dev_set, dev_set_raw)
        logger.debug("Token-level confusion matrix:\n" + token_cm.as_table())
        logger.debug("Token-level scores:\n" + token_cm.summary())
        logger.info("Entity level P/R/F1: %.2f/%.2f/%.2f", *entity_scores)

        f1 = entity_scores[-1]
        return f1

To evaluate on the dev set, first make the windowed data using make_windowed_data within preprocess_sequence_data:

    dev_set = self.preprocess_sequence_data(self.helper.vectorize(dev))

Then get the minibatches from dev_set with shuffle=False; the first minibatch is

    [array([[15, 11,  1, 12,  2, 11],
            [ 1, 12,  2, 11,  3, 13],
            [ 2, 11,  3, 13,  4, 11]]), array([1, 4, 3])]

For each minibatch, pass only inputs_batch to create_feed_dict() (labels_batch = None) to get the predictions for that minibatch:

    def predict_on_batch(self, sess, inputs_batch):
        feed = self.create_feed_dict(inputs_batch)
        predictions = sess.run(tf.argmax(self.pred, axis=1), feed_dict=feed)
        return predictions

    preds = []
    # batch_size = 3 in this example
    for i, batch in enumerate(minibatches(dev_set, self.config.batch_size, shuffle=False)):
        # drop the labels (element 1) and keep only the inputs
        batch = batch[:1] + batch[2:]
        """
        batch = [array([[15, 11,  1, 12,  2, 11],
                        [ 1, 12,  2, 11,  3, 13],
                        [ 2, 11,  3, 13,  4, 11]])]
        """
        preds_ = self.predict_on_batch(sess, *batch)
        preds += list(preds_)
        prog.update(i + 1, [])

    consolidate_predictions(dev, dev_set, preds)

where consolidate_predictions returns a list with one entry per sentence, [[sentence1, labels, labels_], [sentence2, labels, labels_], …]. Here labels_ holds the predictions for the corresponding sentence (len(labels_) = 9 for the first sentence and 2 for the second), and labels holds the true labels for that sentence.

For each consolidated prediction [sentence, labels, labels_], compute the precision, recall and F1 scores:

    token_cm = ConfusionMatrix(labels=LBLS)

    correct_preds, total_correct, total_preds = 0., 0., 0.
    for _, labels, labels_ in self.output(sess, examples_raw, examples):
        # token-level counts
        for l, l_ in zip(labels, labels_):
            token_cm.update(l, l_)
        # entity-level counts: compare whole chunks
        gold = set(get_chunks(labels))
        pred = set(get_chunks(labels_))
        correct_preds += len(gold.intersection(pred))
        total_preds += len(pred)
        total_correct += len(gold)

    p = correct_preds / total_preds if correct_preds > 0 else 0
    r = correct_preds / total_correct if correct_preds > 0 else 0
    f1 = 2 * p * r / (p + r) if correct_preds > 0 else 0

Results and Predictions
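To make the entity-level precision, recall and F1 reported in the logs concrete, here is a minimal sketch for a single sentence. The `get_chunks` body below is an assumed implementation written for this illustration (it groups consecutive tokens with the same non-O label into one chunk); the assignment's own helper may differ. The gold labels are those of the first example sentence, and the predicted labels are the ones saved for that sentence in the predictions file listed at the end of this post.

```python
NONE = 4  # index of the 'O' label in [PER, ORG, LOC, MISC, O]

def get_chunks(seq, default=NONE):
    """Group consecutive tokens with the same non-O label into (label, start, end) chunks."""
    chunks, chunk_type, chunk_start = [], None, None
    for i, tok in enumerate(seq):
        if chunk_type is not None and tok != chunk_type:
            chunks.append((chunk_type, chunk_start, i))   # close the open chunk
            chunk_type, chunk_start = None, None
        if tok != default and chunk_type is None:
            chunk_type, chunk_start = tok, i              # open a new chunk
    if chunk_type is not None:
        chunks.append((chunk_type, chunk_start, len(seq)))
    return chunks

labels = [1, 4, 3, 4, 4, 4, 3, 4, 4]    # gold: EU=ORG, German=MISC, British=MISC
labels_ = [4, 4, 1, 4, 4, 4, 3, 4, 4]   # predicted: EU=O, German=ORG, British=MISC

gold = set(get_chunks(labels))
pred = set(get_chunks(labels_))
correct = len(gold & pred)               # only the 'British' chunk matches exactly

p = correct / len(pred) if correct > 0 else 0
r = correct / len(gold) if correct > 0 else 0
f1 = 2 * p * r / (p + r) if correct > 0 else 0
print("%.2f %.2f %.2f" % (p, r, f1))     # 0.50 0.33 0.40
```

A predicted entity only counts as correct when its label and both of its boundaries match a gold entity, which is why the entity-level scores are lower than the token-level ones.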

Train with “data/tiny.conll” as both train_raw and dev_raw; it contains 721 sentences (the first 2 sentences are illustrated above).

    def do_test2():
        logger.info("Testing implementation of WindowModel")
        config = Config()
        #helper, train, dev, train_raw, dev_raw = load_and_preprocess_data(args)
        #embeddings = load_embeddings(args, helper)
        #config.embed_size = embeddings.shape[1]

        with tf.Graph().as_default():
            logger.info("Building model...",)
            start = time.time()
            model = WindowModel(helper, config, embeddings)
            logger.info("took %.2f seconds", time.time() - start)

            init = tf.global_variables_initializer()
            saver = None

            with tf.Session() as session:
                session.run(init)
                #[[IMPORTANT MAP]]: train_raw, dev_raw ---load_and_preprocess_data()--> train, dev --preprocess_sequence_data()--> train_examples, dev_set
                model.fit(session, saver, train, dev)
                # dev_set: the windowed dev data from preprocess_sequence_data()
                output = model.output(session, dev_raw, dev_set)  # list of lists: [[sentence, labels, labels_]]
                sentences, labels, predictions = zip(*output)
                predictions = [[LBLS[l] for l in preds] for preds in predictions]
                output = zip(sentences, labels, predictions)
                print(sentences[0], labels[0], predictions[0])
                with open("results/window/window_predictions.conll", 'w') as f:
                    write_conll(f, output)

        logger.info("Model did not crash!")
        logger.info("Passed!")

The log of the first and last epochs is:

    #FIRST EPOCH
    INFO:Epoch 1 out of 10
    5/5 [==============================] - 0s - train loss: 1.4808
    INFO:Evaluating on development data
    5/5 [==============================] - 0s
    DEBUG:Token-level confusion matrix:
    go\gu   PER      ORG      LOC      MISC     O
    PER     134.00   57.00    84.00    6.00     543.00
    ORG     52.00    29.00    25.00    5.00     280.00
    LOC     47.00    36.00    70.00    4.00     420.00
    MISC    28.00    10.00    27.00    5.00     188.00
    O       167.00   93.00    81.00    14.00    6761.00
    DEBUG:Token-level scores:
    label   acc    prec   rec    f1
    PER     0.89   0.31   0.16   0.21
    ORG     0.94   0.13   0.07   0.09
    LOC     0.92   0.24   0.12   0.16
    MISC    0.97   0.15   0.02   0.03
    O       0.81   0.83   0.95   0.88
    micro   0.91   0.76   0.76   0.76
    macro   0.91   0.33   0.27   0.28
    not-O   0.93   0.24   0.12   0.16
    INFO:Entity level P/R/F1: 0.09/0.06/0.07
    ...
    #LAST EPOCH
    INFO:Epoch 10 out of 10
    5/5 [==============================] - 0s - train loss: 0.3210
    INFO:Evaluating on development data
    5/5 [==============================] - 0s
    DEBUG:Token-level confusion matrix:
    go\gu   PER      ORG      LOC      MISC     O
    PER     670.00   40.00    33.00    14.00    67.00
    ORG     133.00   96.00    41.00    19.00    102.00
    LOC     41.00    25.00    402.00   16.00    93.00
    MISC    59.00    24.00    46.00    52.00    77.00
    O       95.00    44.00    43.00    23.00    6911.00
    DEBUG:Token-level scores:
    label   acc    prec   rec    f1
    PER     0.95   0.67   0.81   0.74
    ORG     0.95   0.42   0.25   0.31
    LOC     0.96   0.71   0.70   0.70
    MISC    0.97   0.42   0.20   0.27
    O       0.94   0.95   0.97   0.96
    micro   0.95   0.89   0.89   0.89
    macro   0.95   0.63   0.59   0.60
    not-O   0.96   0.64   0.60   0.62
    INFO:Entity level P/R/F1: 0.49/0.53/0.51

The results are written to “results/window/window_predictions.conll”. The saved predictions for the first 2 sentences are shown below; each row gives the token, its true label and its predicted label:

    EU         ORG   O      #(False)
    rejects    O     O
    German     MISC  ORG    #(False)
    call       O     O
    to         O     O
    boycott    O     O
    British    MISC  MISC
    lamb       O     O
    .          O     O
    Peter      PER   PER
    Blackburn  PER   PER

Author Luyao Peng

LastMod 2019-05-06